TabPFN: The AI Revolution for Tabular Data

Predictive LLMs: Scalability, Reproducibility & DeepSeek

Evaluation of the Election Forecast 2025

Predictive LLMs: Can open source models outperform OpenAI when it comes to price forecasts?

Introducing the kafka R Package

XGBoost vs. LLMs for Predictive Analytics

Optimization of Signal Detection Using Machine Learning

Predictive LLMs: Can GPT-3.5 enhance XGBoost predictions?

Business Case: ESG Reporting Platform

Demystifying Encoding in MariaDB/MySQL: Tips to prevent Data Nightmares

Business Case: Real-time fraud detection platform

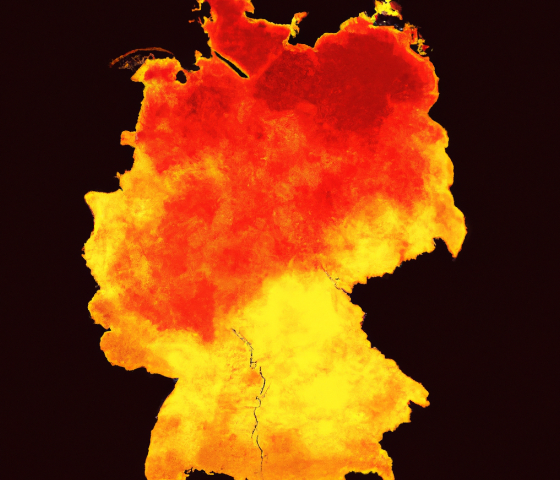

Business Case: Bayesian forecast model for the German federal elections

Business Case: Customized stack for automated air pollutant forecasting in Berlin

Refactoring: An Introduction

Success Factors for Data Science Projects

Measure, compare, optimize: data-driven decisions with A/B testing

Interpretability of AI Models with XAI

Who’s best in class? Comparing forecasting models with a Predictive Analytics Cube (PAC)

White Paper: Customer Segmentation

Automated Excel Reports with Python

White Paper: Customer Lifetime Value

The World of Containers: Introduction to Docker

Pandas DataFrame Validation with Pydantic - Part 2

Pandas DataFrame Validation with Pydantic

Understand Customer Decision Making: Discrete Choice Models with RStan

Code performance in R: Working with large datasets

Code performance in R: Parallelization

How to Automate a Website Image Crawler Twitter Bot

Code performance in R: How to make code faster

Code performance in R: Which part of the code is slow?

Understand customer decision making: Discrete choice models in marketing

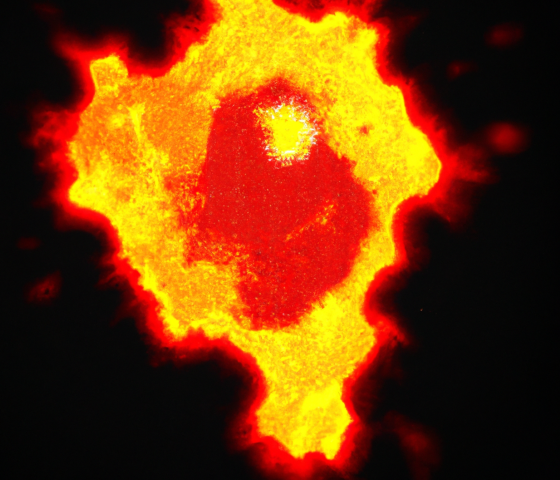

The Representation of Corona Incidence Figures in Space and Time

COVID-19: Heat Map of Local 7-day Incidences over Time

White Paper: Attribution 2.0

Reflecting on 2020 US Election Forecasts: 10 Takeaways for Data Scientists

Churn Analysis for Attracting and Retaining High-Value Customers

Python Christmas Decoration

Protecting Your Database from SQL Injections

Reinforcement Learning for Marketing: Lessons and Challenges

Continuous Integration: Introduction to Jenkins

Continuous Integration: What it is, Why it Matters, and Tools to Get Started

Understanding and Handling Missing Data

shinyMatrix - Matrix Input for Shiny Apps

Building a Strong Data Science Team from the Ground Up

Data Visualization in R vs. Python

Debugging in R: How to Easily and Efficiently Conquer Errors in Your Code

Marketing Mix Modeling - How Does Advertising Really Work?

Multi-Armed Bandits as an A/B Testing Solution

Data Quality and the Importance of Data Stewardship

Best Practice: Development of Robust Shiny Dashboards as R Packages

What's the Best Statistical Software? A Comparison of R, Python, SAS, SPSS and STATA

Shiny Modules

Best Practice in TV Tracking: Why a Simple Baseline Correction Falls Short!

rsync as an R Package

Using Modules in R

ggCorpIdent: Stylize ggplot2 Graphics in Your Corporate Design

R Markdown Template for Business Reports

Cluster Analysis - Part 2: Hands On

Cluster Analysis - Part 1: Introduction

Optimize your R Code using Memoization

Introducing the Kernelheaping Package III

Do GPU-based Basic Linear Algebra Subprograms (BLAS) improve the performance of standard modeling techniques in R?

Introducing the Kernelheaping Package II

Prediction: Who will win the 2018 World Cup?

Design Patterns in R

smoothScatter with ggplot2

Introducing the Kernelheaping Package

INWT's guidelines for R code

Business Case: Predictive Customer Journey (PCJ)

Business Case: Spillover Analysis

A Not So Simple Bar Plot Example Using ggplot2

Business Case: Customer Segmentation

Business Case: Spatial Data Visualization

Promises and Closures in R

Plane Crash Data - Part 2: Google Maps Geocoding API Request

Plane Crash Data - Part 3: Visualisation

A meaningful file structure for R projects

Plane Crash Data - Part 1: Web Scraping

Who will win the 2017 Bundestag election?

MariaDB monitor

100 grams of Lego, please.

Business Case: Quality Index

Business Case: Attribution 2.0

Business Case: Staff Planning

Business Case: Customer Lifetime Value (CLV)

Business Case: TV Impact